Data and Databases ~ Concise and Simple

(As with all "concise and simple" articles I assume no prior knowledge of the subject and keep the length to less than 1500 words.)

"Scientia potentia est." (Knowledge is power.)

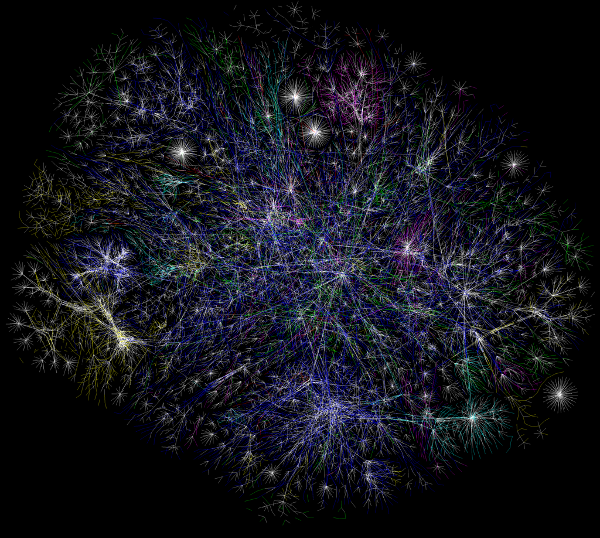

In the digital world knowledge is stored, in its latent form, as data in databases. Everyone from your local supermarket to the government collects data about all of us. Information and knowledge is extracted about all aspects of our lives, from our shopping habits to whether we've paid our taxes (it is no accident that the examples above relate to extracting money from us). According to Google it currently takes just two days for us to generate as much information as was created by all of humanity from the dawn of time until 2003.

This article is about data and databases - the technology that makes the accumulation and analysis of this flood of information possible. It starts with a simple question: what is data?

"Data" are values that convey meaningful information about something. Meaning is implied by the context and structure of the data. Structure is formed from the way data is represented. Databases simply store and provide mechanisms for working with structured data.

The most fundamental data-related act is to name a value:

my_name = "Nicholas Tollervey"My name is Nicholas Tollervey. This is shown by

"my_name" being assigned the value "Nicholas Tollervey".

Assignment happens when a token name is followed by an equals

sign that is, in turn, followed by a value. Notice the three

layers: meaning, structure and representation.

Another example:

nicholas = {

"first_name": "Nicholas",

"middle_name": "Harry",

"surname": "Tollervey",

"birthday": {

"year": 1973,

"month": 8,

"day": 19

}

"height": 180,

"weight": 76.9

}After reading the above I'm certain you'll know when to send me a birthday

card. Something called nicholas has a single value associated with

it: an

associative

array. Associative arrays are key / value pairs - like a

dictionary of words or a subject index at the back of a book. In this case it

has six entries, one of which is itself a nested associative array with three

entries. It's an associative array because it is enclosed by

curly braces and contains a list of "key_name": value pairs

separated by commas. The associative array is assigned to nicholas

in exactly the same way as the first example (token name followed by

equals followed by value).

Incidentally, I can retrieve my data like this:

>>> my_name

"Nicholas Tollervey"

>>> nicholas["height"]

180(This example uses the Python

programming language: I type in commands after the >>>

prompt and the Python interpreter replies on the new line.)

A final example:

| id | first_name | middle_name | surname | nationality_id |

|---|---|---|---|---|

| 1 | Nicholas | Harry | Tollervey | 2 |

| 2 | Terry | Charles Oliver | Jones | 1 |

| 3 | Esteve | Fernandez | 3 |

| id | name |

|---|---|

| 1 | Australian |

| 2 | British |

| 3 | Spanish |

Obviously, I'm British and my buddies

Terry and

Esteve are

Australian and Spanish (respectively). You know this because you understand how

to read tabular information and spot the relationship between the values in the

nationality_id column of the People table and the values

in the id column of the Nationalities table. You know

it's tabular information because it's a two dimensional grid with the

vertical columns indicating named fields and horizontal rows representing

individual records. Once again: meaning, structure, representation.

Each example is more complex than the one preceding it. The first is a

single key / value pair (where my_name is the key and

"Nicholas Tollervey" is the value), the second is a key

(nicholas) associated with a value that is itself key / value

pairs (the associative array). Finally, the third example contains rows of

items that all share the same keys (a.k.a. columns) but different values. Each

type of record (people, nationalities etc) has its own table.

How do such meaningful data structures / representations relate to databases?

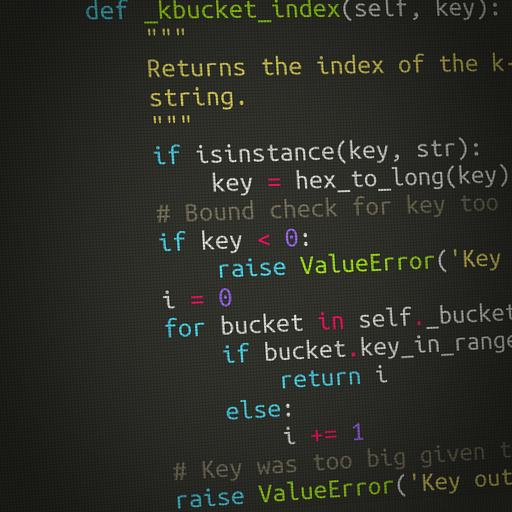

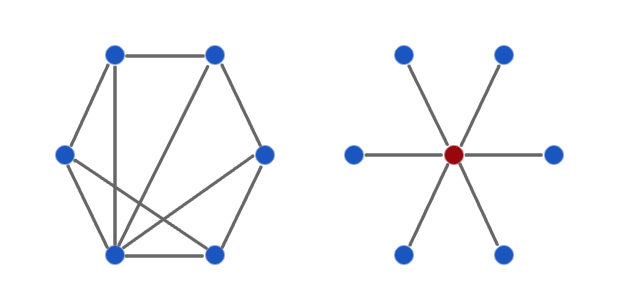

There are four basic actions that all databases may perform on data: create, read, update and delete (often expressed as CRUD). There are many types of database that meet this minimum functionality. The three data examples illustrate the types of data structure stored in three of the most popular types of database: key / value stores (such as Redis or Memcached), document oriented databases (like MongoDB or CouchDB) and relational databases (for example, Postgres or MySQL).

Key / value databases are, at heart, associative arrays. They provide three basic actions:

- Assign a value to a key (both creating and updating).

- Read a value associated with a known key.

- Delete a value using a known key.

Such simple databases are extremely fast and well suited for tasks where speed is of the essence. However, there isn't a simple way to search the stored values and keys must be known before being used to retrieve or delete associated values. Nevertheless, they are extremely useful as caches of arbitrary values. If you want to try such a database you should visit the try redis tutorial tutorial ~ a gentle yet comprehensive tour of a popular key / value store.

Document oriented databases store arbitrarily structured documents expressed as associative arrays. Every document has a unique ID created by the database and used as a key to reference the document.

However, documents are not opaque values as in key / value databases. Rather, it is possible to reach in to the documents and construct queries that reference the document's named attributes (keys). For example, given a people database I could create a query to retrieve all documents that have a height attribute with a value greater or equal to 180. Since the structure of the documents is arbitrary, such queries rely on conventions (all people documents have a height attribute whose value is expressed in centimetres).

Because document structure is not fixed (except by convention), new fields

can be added at any time and document oriented databases specify default

handling for missing fields - usually they'll evaluate to null

(unknown).

To play with a document oriented database, explore the MongoDB tutorial on the project's homepage.

Relational

databases developed from the work of

Edgar Codd. They store

data in rows

(tuples) that share the same

columns

(attributes)

defined in tables

(relations) -

bracketed words are the formal terminology for the colloquially named

concepts. Each row contains a special field called the

primary key (the

id column in the example) that uniquely identifies the record.

Tables, representing different sorts of things, are related to each other with

special fields called

foreign keys that

reference primary keys of rows in different tables (the

nationality_id). Many relational databases also enforce

constraints such as

referential

integrity - where foreign keys may not refer to non-existent primary

keys.

To work efficiently, data in relational databases should be normalised to avoid duplication. Data need only be stored in one table representing a specific type of thing. Therefore, modifications only happen once and are propagated via the relationships between tables. For example, the nationalities table is the only place where nationalities may be defined. Tables for things with nationalities (such as people) need only reference the single nationalities table via a foreign key rather than duplicate such information. The process of normalisation usually has three steps of increasing efficiency: first, second and third normal forms. Sometimes it is necessary to de-normalise a database for performance reasons - it's faster to retrieve a single row than it is to retrieve several related rows from different tables.

Structured Query Language (SQL) is the programming language used by relational databases to work with data. Based upon relational algebra, it provides CRUD functionality and capabilities for specifying the structure and relationships between data. It's easy to create powerful queries:

SELECT people.first_name, people.surname, nationalities.name

FROM people

JOIN nationalities ON nationalities.id = people.nationality_id

WHERE people.surname = "Jones"The result of which is:

| people.first_name | people.surname | nationalities.name |

|---|---|---|

| Terry | Jones | Australian |

The query asks: give me the first name, surname and nationality (line 1) of people whose surname is "Jones" (line 4). We have to specify where to collect this data (line 2) and how to join the related tables together (line 3). Notice that SQL doesn't specify how to run the query but describes what the expected result should be.

Interactions with a database happen as transactions. Transactions can contain many different commands and should have several desirable properties:

- Atomicity - transactions are an all-or-nothing affair that cannot be abandoned in an incomplete state.

- Consistency - transactions fail if they attempt to put the database in to an invalid state such as breaking referential integrity or some other constraint.

- Isolation - each transaction is independent of and cannot interfere with any of the others.

- Durability - once complete a transaction's effect will remain despite catastrophic events like power failure, crashes or errors.

A database is ACID compliant if its transactions meet these standards.

The pace of change in data-based technology is fast. Relatively recent trends, such as big data (huge data sets and the technology needed to manage them), deserve their own article. Yet, even the technology described above allows companies and governments to build frighteningly accurate pictures of our lives (how do you think Amazon is able to make such useful suggestions?).

Personally, I find the ubiquitous analysis and measurement of our behaviour inadvertently Orwellian. Furthermore, there is a tendency to trust the results obtained from databases without recognizing the meaning level of data relies on interpretation (of which there are many varieties). Nevertheless, there are great opportunities to do good and I am reminded of the Buddhist proverb,

"To every man is given the key to the gates of heaven; the same key opens the gates of hell."

I hope we choose the right gate.

1499 words (not including code examples).